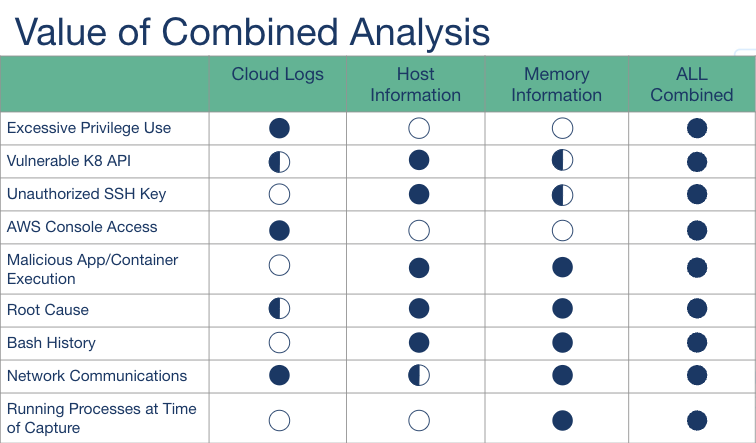

There have been many conversations in the security community about whether cloud forensics is simply log analysis. While logs undoubtedly hold vital information, the depth of data required for effective cloud forensics extends far beyond logs alone. In practice, the more data sources you can analyze in aggregate, the better your investigation will be.

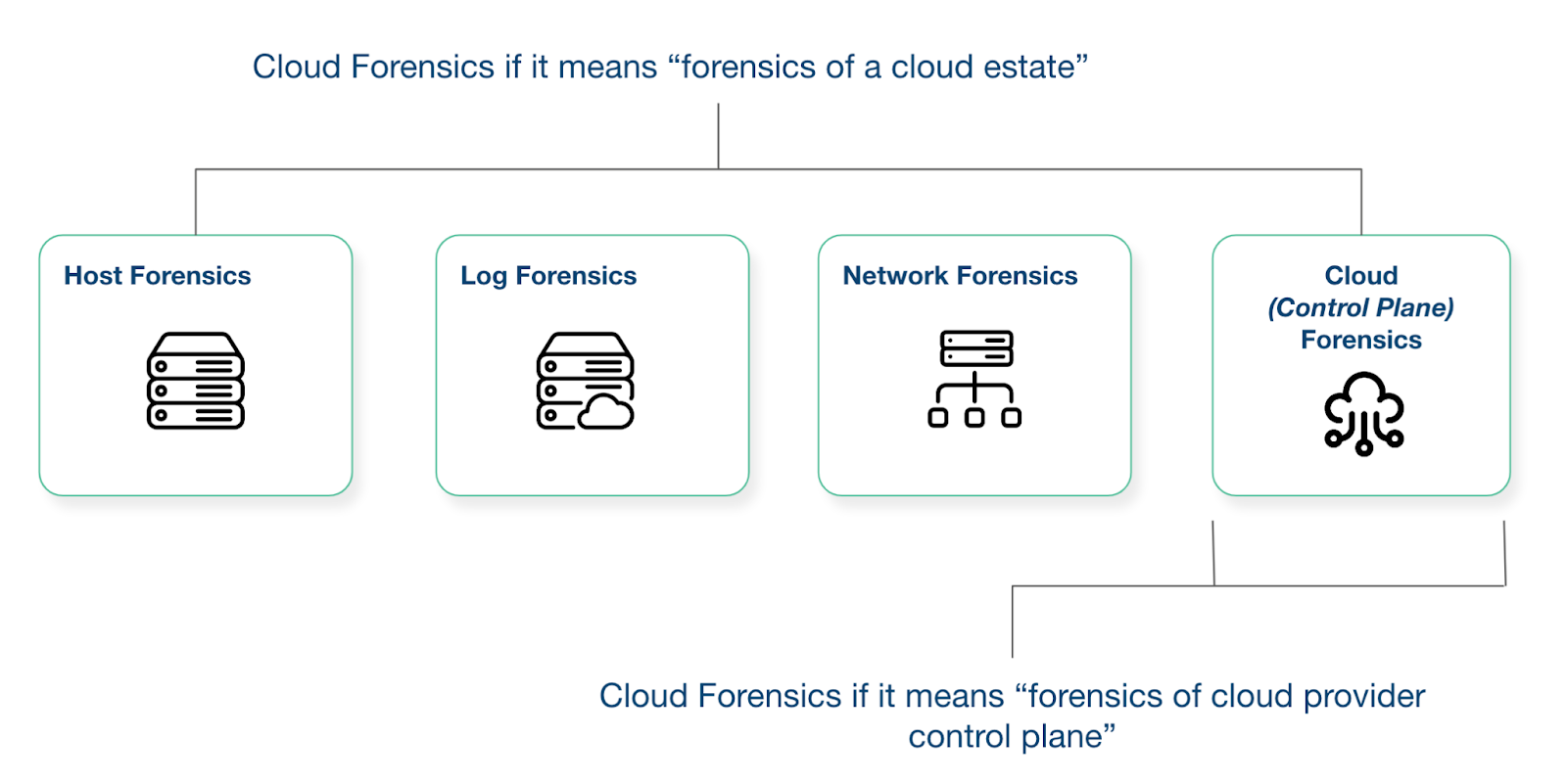

Full cloud forensics vs cloud control plane forensics

The Crucial Need for "Full Content" Data

In addition to cloud provider logs, "full content" data is critical to a forensic investigation. This data spans disk, network, and memory within the cloud infrastructure and provides the context required to identify the root cause and scope of an incident. For example, when investigating an incident involving a Kubernetes cluster running on EC2, the bash history is not available through cloud logs. Without host and memory data from the instance, there is no way to see which bash commands the attacker ran.
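To make the bash history example concrete, here is a minimal Python sketch that pulls shell history files out of a disk image that has already been acquired (for example, from an EBS snapshot) and mounted read-only on an analysis machine. The function name `collect_bash_histories` and the mount point are hypothetical, and the sketch only checks the default locations (`/root/.bash_history` and `/home/*/.bash_history`), so a user with a custom `HISTFILE` would be missed. By default, bash writes history to disk only when a shell exits, so commands from a session that was still live at capture time may exist only in memory, which is why memory capture matters as well.

```python
from pathlib import Path


def collect_bash_histories(mount_root: str) -> dict[str, list[str]]:
    """Map each bash history file found in a mounted image to its non-empty lines."""
    root = Path(mount_root)
    # Default history locations only; a custom HISTFILE will be missed
    candidates = [root / "root" / ".bash_history"]
    home = root / "home"
    if home.is_dir():
        candidates.extend(sorted(home.glob("*/.bash_history")))

    histories: dict[str, list[str]] = {}
    for path in candidates:
        # Skip symlinks: an absolute link inside the image would resolve
        # against the analyst's own filesystem, not the evidence
        if path.is_symlink() or not path.is_file():
            continue
        # errors="replace" avoids aborting on non-UTF-8 bytes left in the file
        text = path.read_text(encoding="utf-8", errors="replace")
        # Report paths as they appear inside the image, e.g. "/root/.bash_history"
        in_image = "/" + path.relative_to(root).as_posix()
        histories[in_image] = [line for line in text.splitlines() if line.strip()]
    return histories
```

Calling `collect_bash_histories("/mnt/evidence")` (a hypothetical mount point) returns a mapping from each history file's path inside the image to its non-empty command lines, ready to be correlated with cloud log events.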

An additional benefit of host-based analysis is the historical context it gives security teams. In many cases, the ability to analyze important events since the time a system was spun up is critical to understanding the true scope of what happened. This is especially important when no other visibility or detection tools were deployed on the system before the incident occurred.

By combining cloud logs with host and memory data, security teams gain the visibility and context needed to identify the root cause, scope, and impact of a compromise.

Navigating Complexities: Attaining Depth in Complex Cloud Environments

Without adequate tools, accessing the diverse data sources in modern cloud environments can feel like navigating a labyrinth. Here are some of the top challenges security teams face in obtaining the depth of data they need to perform cloud forensics and incident response.

Access is managed by a cloud team

In many enterprises, access to cloud resources is owned by the cloud team. This means that every time an analyst needs to acquire a full disk image, they have to work through a different part of the organization, often by manually opening a ticket to request access. This process can delay the start of an investigation by days or even weeks.

Multi-cloud is the new normal

More and more companies are moving to multi-cloud environments for numerous reasons. When it comes to incident response, however, having your data spread across multiple clouds can make getting to the bottom of what happened a daunting task, and it is even harder when an attack spans multiple cloud service providers.

The dynamic nature of ephemeral resources

The rise of ephemeral resources, such as container-based and serverless architectures, can make getting the data you need for an investigation nearly impossible. These transient resources are continuously spun up and torn down, which poses a significant hurdle for security teams: if malicious activity occurs during a resource's short lifetime and no evidence is captured before it is torn down, that evidence is lost forever.

Cloud log default configurations

Note that not all cloud logs are enabled by default, so it is critical to check your configuration and make sure you will have access to the data you need for an investigation. For example, in GCP, Data Access audit logs are disabled by default for all services except BigQuery. To maintain a good overall audit configuration, all three types of Data Access audit logs (ADMIN_READ, DATA_READ, and DATA_WRITE) should be enabled for the GCP services in use.
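One way to audit this setting is to inspect the project's IAM policy, whose `auditConfigs` section lists the enabled log types per service, with the special `allServices` entry applying to every service. The minimal Python sketch below assumes the policy has been exported as JSON, for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`, and reports which of the three Data Access log types are missing for each service you name. It is a simplification: it treats a service's effective configuration as the union of its own entry and the `allServices` entry, ignores `exemptedMembers`, does not look at policies inherited from folders or the organization, and does not special-case BigQuery's default-on logging. The function name is hypothetical.

```python
REQUIRED_LOG_TYPES = {"ADMIN_READ", "DATA_READ", "DATA_WRITE"}


def missing_data_access_logs(policy: dict, services_in_use: list[str]) -> dict[str, set[str]]:
    """Return {service: missing log types} for services lacking full Data Access logging."""
    # Collect the log types enabled per service from the policy's auditConfigs
    enabled: dict[str, set[str]] = {}
    for cfg in policy.get("auditConfigs", []):
        types = {c["logType"] for c in cfg.get("auditLogConfigs", [])}
        enabled.setdefault(cfg["service"], set()).update(types)

    # "allServices" applies to every service; per-service entries add to it
    baseline = enabled.get("allServices", set())
    missing: dict[str, set[str]] = {}
    for service in services_in_use:
        effective = baseline | enabled.get(service, set())
        gap = REQUIRED_LOG_TYPES - effective
        if gap:
            missing[service] = gap
    # Note: exemptedMembers and inherited org/folder policies are not considered
    return missing
```

Any service that shows up in the result can be fixed by adding the missing `logType` entries to the policy's `auditConfigs` and applying it with `gcloud projects set-iam-policy`.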

How Cado Can Help

Cado Security streamlines cloud forensics investigations by addressing the key challenges discussed above. The Cado platform is designed to offer capabilities that simplify and expedite data collection and analysis in cloud environments through automation. Here are some of the key capabilities Cado offers:

Automated data collection: The Cado platform can fully automate the capture of critical forensic evidence. This enables security teams to drastically reduce the time it takes to start an investigation, which is especially important for ephemeral resources, where data can disappear in the blink of an eye.

Depth of data: Cado automatically collects hundreds of data sources across cloud provider logs, disk, memory, and more. This data is then enriched using both third-party and proprietary threat intelligence to surface actionable insights, such as the incident's root cause and the impacted assets and roles.

Unified data analysis: A unified data view is critical to enabling security teams to more easily consume evidence captured across multiple cloud platforms, services, and data sources. By having access to a single timeline, security teams can seamlessly dive into important data and quickly pivot their investigation based on key events.

Multi-cloud support: Cado supports a wide range of cloud servers, storage, container, and serverless options across the three top Cloud Service Providers (CSPs): AWS, Azure, and GCP. The platform automatically presents data captured across multiple cloud platforms in a single view, streamlining investigations in multi-cloud environments.
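The single-timeline idea can be illustrated with a generic sketch; this is not Cado's implementation. It assumes events have already been parsed out of each source (for instance, AWS CloudTrail, GCP audit logs, and host artifacts) into a common, hypothetical `Event` record, and it normalizes every timestamp to UTC so that events from different clouds and hosts can be ordered on one timeline. Real log formats each need their own parser, and treating timestamps without an offset as UTC is an assumption that should be verified per source.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    timestamp: datetime  # timezone-aware, normalized to UTC
    source: str          # hypothetical labels, e.g. "aws-cloudtrail", "gcp-audit"
    description: str


def parse_ts(raw: str) -> datetime:
    """Parse an ISO 8601 timestamp and normalize it to UTC."""
    if raw.endswith("Z"):
        # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11
        raw = raw[:-1] + "+00:00"
    ts = datetime.fromisoformat(raw)
    if ts.tzinfo is None:
        # Assumption: timestamps without an offset are already UTC
        ts = ts.replace(tzinfo=timezone.utc)
    return ts.astimezone(timezone.utc)


def unified_timeline(*sources: list[Event]) -> list[Event]:
    """Merge events from any number of sources into one chronological list."""
    # sorted() is stable, so events with identical timestamps keep source order
    return sorted((event for source in sources for event in source),
                  key=lambda event: event.timestamp)
```

For example, a CloudTrail event stamped `2024-01-01T10:05:00Z` and a GCP audit entry stamped `2024-01-01T11:00:00+02:00` end up with the GCP entry first (09:00 UTC), even though the raw strings suggest the opposite order. This is exactly the kind of cross-cloud mix-up a single normalized timeline is meant to prevent.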

To see how Cado enables cloud investigations, contact our team to schedule a demo.
